As a market data provider, we’ve observed a trend emerging. In recent years, we started receiving many more requests from potential clients to handle the infrastructure components for them as well as the provision of market data itself. Ordinarily, the client would have to develop their own systems for delivering our data to their end users. This includes servers, network channels, firewalls and the like, as well as having to monitor and manage the changing loads on these systems.

As cloud technology has come of age and the software-as-a-service (SaaS) model has truly found its feet, businesses are becoming comfortable with outsourcing more of their crucial components to specialists. These models have also led to a situation where smaller, more agile startups can effectively compete with larger incumbents without having to develop everything in-house or employ a standing army of engineers to manage these systems.

At dxFeed, we piloted a number of projects that involved taking on the responsibilities of both infrastructure and data provision. Three of the biggest online brokerages and specialist depth-of-book trading software providers confirmed this was fertile ground for future growth. We went on to provide similar services to one of the biggest American banks, essentially creating, deploying, and managing the systems required to deliver our high-quality market data to all of their users. This involved installing their applications on our end, aggregating market data from a variety of exchanges, and delivering it via our new infrastructure rather than directly to our end clients.

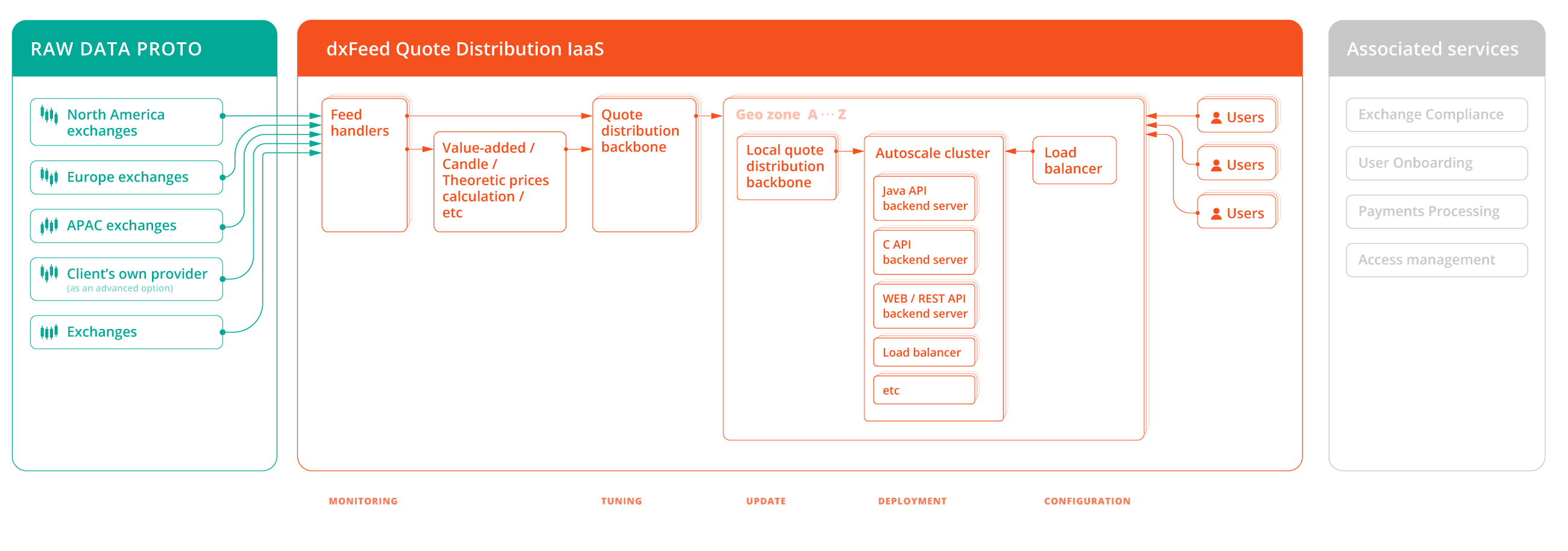

dxFeed’s IaaS client can concentrate on marketing and promotional activities, while we solve all of the technical problems related to market data delivery. The end user connects to the client’s business as they normally would and then receives authorization in the form of a token that permits them to establish a secure connection to our systems. The data flows just as it ordinarily would, so the business doesn’t need to deal with the overheads of building, managing, and maintaining everything required to make this happen.
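To make the token step concrete, here is a minimal sketch of one way such a handshake could work. It assumes an HMAC-signed, short-lived token and a secret shared between the client's business and the data provider. The token layout, the `SHARED_SECRET` value, and the function names are illustrative assumptions, not a description of dxFeed's actual scheme.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional

# Hypothetical secret shared by the client's business and the data provider.
SHARED_SECRET = b"demo-secret"


def issue_token(user_id: str, ttl_seconds: int, now: Optional[float] = None) -> str:
    """Client's business side: sign a short-lived claim for a logged-in end user."""
    issued_at = time.time() if now is None else now
    payload = json.dumps({"sub": user_id, "exp": issued_at + ttl_seconds}).encode()
    signature = hmac.new(SHARED_SECRET, payload, hashlib.sha256).digest()
    return (
        base64.urlsafe_b64encode(payload).decode()
        + "."
        + base64.urlsafe_b64encode(signature).decode()
    )


def verify_token(token: str, now: Optional[float] = None) -> Optional[str]:
    """Provider side: return the user id if the token is authentic and unexpired."""
    current = time.time() if now is None else now
    try:
        payload_b64, signature_b64 = token.split(".")
        payload = base64.urlsafe_b64decode(payload_b64)
        signature = base64.urlsafe_b64decode(signature_b64)
    except ValueError:  # wrong number of parts or invalid base64
        return None
    expected = hmac.new(SHARED_SECRET, payload, hashlib.sha256).digest()
    if not hmac.compare_digest(signature, expected):
        return None
    claims = json.loads(payload)
    if claims["exp"] < current:
        return None
    return claims["sub"]
```

In this sketch the end user never sees the secret: they only carry the signed token from the client's business to the data connection, where it is checked before any data flows.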

On our side, we handle the systems, their capacity, data throughput, latency issues, and so on. We currently deploy in the AWS and GCP clouds, though our approach is generally cloud-agnostic, and our team is tasked with configuring the virtual servers, load balancers, and auto scaling groups.

This allows instances to scale automatically according to the end client’s ever-changing needs. So, rather than drawing on more resources than are necessary at any given time, more servers are automatically spun up only when usage increases (for usage, read CPU, memory, network throughput, or an increase in the number of identical client sessions per server).
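As a rough illustration of that scaling rule, the sketch below sizes a fleet so that the most heavily loaded dimension returns to its target level. The `Usage` fields, the target values, and the server limits are assumptions chosen for the example. In a real deployment this decision would normally be made by the cloud provider's auto scaling service rather than by hand-written code.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Usage:
    """Average per-server reading for each usage dimension (units are illustrative)."""
    cpu_percent: float
    memory_percent: float
    network_mbps: float
    sessions_per_server: float


def desired_servers(
    current: int,
    observed: Usage,
    target: Usage,
    min_servers: int = 2,
    max_servers: int = 20,
) -> int:
    """Return the server count that brings the busiest dimension back to its target."""
    pairs = [
        (observed.cpu_percent, target.cpu_percent),
        (observed.memory_percent, target.memory_percent),
        (observed.network_mbps, target.network_mbps),
        (observed.sessions_per_server, target.sessions_per_server),
    ]
    # Scale each dimension proportionally; the most loaded one decides the size.
    wanted = max(math.ceil(current * seen / goal) for seen, goal in pairs)
    # Never drop below the floor or grow past the ceiling.
    return max(min_servers, min(max_servers, wanted))


# Hypothetical targets: keep servers at about 60% CPU, 70% memory, and so on.
TARGET = Usage(cpu_percent=60, memory_percent=70, network_mbps=400, sessions_per_server=1000)
```

The same function covers both directions: a reading above target yields a larger fleet, and a reading well below target yields a smaller one, bounded by `min_servers` so that some capacity always remains.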

Then, when usage decreases, the system can effectively downscale while maintaining sufficient capacity to serve clients with no noticeable changes in the level of service they’re accustomed to. As a backbone to all these performance optimizations, we have load balancers, which redirect new connections to servers with more excess capacity in order to reach an even distribution of connections across all available servers.
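The balancing behavior described here resembles a least-loaded policy. The following minimal sketch assumes each server reports its current connection count and a fixed capacity. The server names and numbers are made up for illustration, and managed cloud load balancers offer comparable policies without custom code.

```python
from typing import Dict


def pick_server(connections: Dict[str, int], capacity: Dict[str, int]) -> str:
    """Choose the server with the most spare capacity for the next connection."""
    return max(connections, key=lambda name: capacity[name] - connections[name])


def route(new_connections: int, connections: Dict[str, int], capacity: Dict[str, int]) -> Dict[str, int]:
    """Assign new connections one at a time and return the resulting distribution."""
    result = dict(connections)  # leave the caller's snapshot untouched
    for _ in range(new_connections):
        result[pick_server(result, capacity)] += 1
    return result


# Hypothetical snapshot: server "a" is nearly full, "c" was just spun up.
before = {"a": 90, "b": 40, "c": 10}
limits = {"a": 100, "b": 100, "c": 100}
after = route(60, before, limits)
```

Because every new connection goes to whichever server has the most headroom, a freshly started server fills up first and the counts converge toward an even spread, without moving any existing sessions.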

In a new project we’re currently developing for FXCM, a leading international provider of online foreign exchange, CFD trading and related services, our team is working to implement geographic routing, where we can run several clusters in Europe, the United States, and Asia. Each is identical in its data feed availability and functionality, so end users can automatically be redirected to the cluster that’s physically closest to them, based on their IP address.
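One way to picture geographic routing is as a nearest-cluster lookup. The sketch below assumes the end user's IP address has already been resolved to approximate coordinates by some GeoIP service, which is not shown. The cluster locations are placeholders rather than actual deployment sites, and in practice this kind of routing is often delegated to a DNS-level geolocation or latency policy.

```python
import math
from typing import Dict, Tuple

Coordinates = Tuple[float, float]  # (latitude, longitude) in degrees

# Placeholder cluster locations, one per region named in the text.
CLUSTERS: Dict[str, Coordinates] = {
    "europe": (50.11, 8.68),
    "united-states": (39.04, -77.49),
    "asia": (1.35, 103.82),
}


def haversine_km(a: Coordinates, b: Coordinates) -> float:
    """Great-circle distance between two points on Earth, in kilometres."""
    lat1, lon1, lat2, lon2 = map(math.radians, (a[0], a[1], b[0], b[1]))
    half_chord = (
        math.sin((lat2 - lat1) / 2) ** 2
        + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2
    )
    return 2 * 6371.0 * math.asin(math.sqrt(half_chord))


def nearest_cluster(user_location: Coordinates) -> str:
    """Return the name of the cluster physically closest to the user."""
    return min(CLUSTERS, key=lambda name: haversine_km(user_location, CLUSTERS[name]))
```

Since every cluster offers the same feeds and functionality, the choice of cluster only affects latency, so a simple distance comparison is enough for this illustration.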

In our proof of concept, we began with everything running manually, with dxFeed monitoring and servicing client needs by hand. Owing to the success of this proof of concept, we’ve since moved to make the switching automatic, using Terraform to automate what gets spun up and when.

Now, as the system matures, we’re working to template the entire process in order to make it more accessible and affordable to smaller businesses. There is, of course, an unavoidable element of customization that’s necessary from one client to the next, but we’re becoming very adept at quickly formalizing these unique considerations for each and every new partner we work with.

These considerations include different auto-scaling requirements as a result of the different types of end users our clients may be serving. These end users can vary from power users, of whom each cluster can serve only a limited number, to light clients on mobile, where each cluster can serve a far greater number of concurrent sessions. The traffic characteristics of our clients’ end users can also vary greatly between venues, from users that are almost always connected to users that come and go in great numbers with comparatively short session times.
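To show how one general template might be specialized per client, here is a small sketch in which a base scaling profile is copied and adjusted for different end-user types. The profile names and all of the numbers are hypothetical and chosen only to make the contrast visible.

```python
import math
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class ScalingProfile:
    """Per-client scaling settings derived from a shared template."""
    name: str
    sessions_per_server: int
    min_servers: int
    max_servers: int

    def servers_for(self, concurrent_sessions: int) -> int:
        """Servers needed for a given number of concurrent sessions, within limits."""
        needed = math.ceil(concurrent_sessions / self.sessions_per_server)
        return max(self.min_servers, min(self.max_servers, needed))


# Hypothetical general template and two client-specific variations of it.
BASE = ScalingProfile("base-template", sessions_per_server=1000, min_servers=2, max_servers=50)
POWER_USERS = replace(BASE, name="power-users", sessions_per_server=200)
MOBILE_LIGHT = replace(BASE, name="mobile-light", sessions_per_server=5000)
```

With these illustrative values, the same 10,000 concurrent sessions would call for far more servers under the power-user profile than under the mobile one, which is why the scaling settings have to be tuned per client.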

The great thing about this approach, from our perspective, is that each new implementation is yet another opportunity for us to enrich our general template of what these types of businesses require. Every time we have to customize this general template to account for more edge cases and unique requirements, our Infrastructure-as-a-Service approach becomes smarter. Functionally, this means that every time we onboard a new client, we’re better equipped to serve their needs. This in turn streamlines the entire path to deployment, allowing us to bring the solution to market more quickly, while also making it much more accessible to smaller businesses. The ultimate goal of this approach is to have the general dxFeed market data infrastructure-as-a-service template be comprehensive and fully configurable, so that new partners can easily select what they need from what we have available.

Who is all of this for?

We’re learning that the types of businesses this approach is attractive to are constantly growing in number. They range from startups looking to move fast and break things to small established names who want to expand their offering, but for whom the overheads associated with going it alone may not be viable. We’re also finding that even larger, well-known brands are recognizing that there are numerous competitive advantages to outsourcing this type of market data infrastructure to firms that specialize in this field. Finally, even those who have yet to be fully convinced are finding that since the pandemic, supply chain constraints and the resultant chip shortages have increased lead times to such an extent that an IaaS implementation may be the only feasible option in the short to medium term.

Learn more

Want to see what the system can do? Get in touch with our team for a more detailed demonstration.